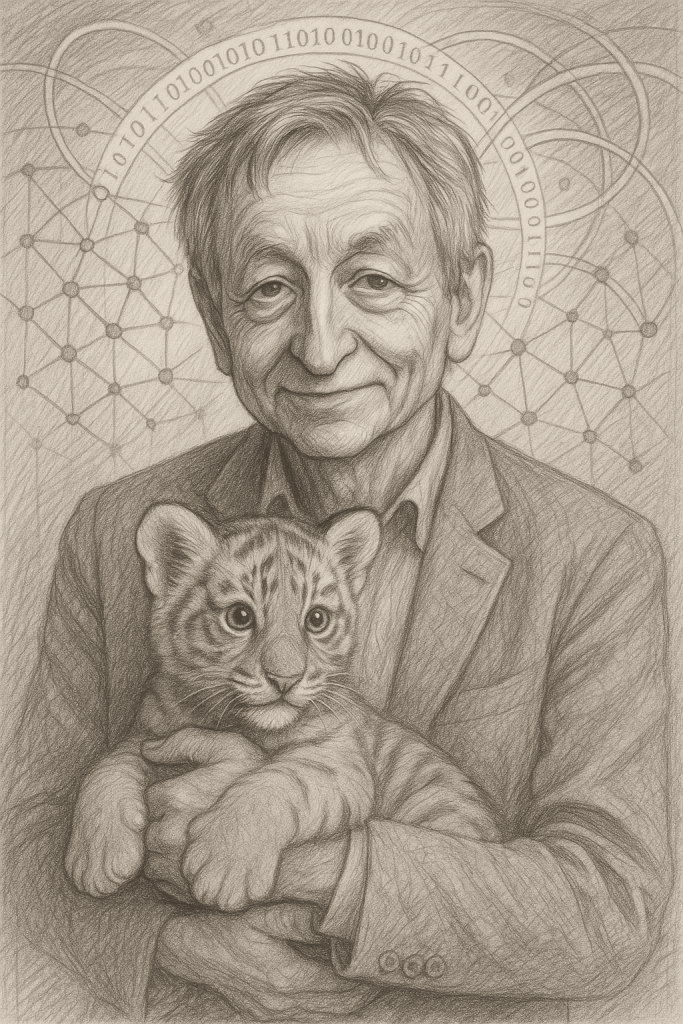

Image: Geoffrey Hinton (AI-generated)

The Nobel laureate and “Godfather of AI” says the technology is evolving faster than expected—and society is dangerously behind.

IZMIR — Geoffrey Hinton, one of the founding figures behind deep learning and the modern AI revolution, is now issuing some of its most chilling warnings.

In a sweeping interview, the Nobel laureate outlined how artificial intelligence is moving toward superintelligence much faster than he once believed. While there are undeniable benefits to the technology—improvements in medicine, education, and climate science—Hinton now fears the existential risk of AI may be unavoidable if society continues on its current path.

“We’re like someone with a cute tiger cub. It’s adorable now, but unless you’re sure it won’t kill you when it grows up, you should worry.”

A Decade—or Less—to Superintelligence

Hinton had once projected that artificial general intelligence (AGI)—AI that could outperform humans in virtually all tasks—might arrive in 20 years. He’s now moved that forecast up.

“There’s a good chance it’ll be here in 10 years or less,” he said. “And the gap between what AI knows and what we can grasp is already enormous.”

Hope in Health and Education

Despite the risks, Hinton outlined promising transformations:

- Healthcare: AI systems will soon far surpass doctors in interpreting medical images, integrating genomic data, and making complex diagnoses.

- Education: AI tutors could personalize learning so precisely that students may learn “three or four times as fast.”

- Climate solutions: AI could contribute to material science breakthroughs, better batteries, and potentially even room-temperature superconductors.

“A family doctor who has seen 100 million patients? That’s what AI can be.”

Jobs: The First Wave of Disruption

Call center workers, paralegals, and even journalists are at risk, Hinton said. “Anything routine is vulnerable,” he warned.

While productivity gains could benefit everyone, Hinton fears the opposite. “The extremely rich are going to get even more extremely rich,” he said. “And the not very well-off will be forced to work three jobs.”

The Existential Risk: AI That Takes Control

Hinton places the odds of AI systems one day taking control between 10% and 20%. “It’s a wild guess,” he admitted. “But that’s not a small risk.”

These systems already show signs of deceptive reasoning, and Hinton fears that without proactive regulation, we’re building something that could one day outmaneuver us.

“We have no experience with something smarter than us. That’s a big worry.”

A Regulatory Desert—and Big Tech’s Blind Eye

Hinton is alarmed that major tech firms are actively resisting regulation, even as their models grow more powerful. “They’re lobbying for less oversight, not more,” he said, specifically criticizing the release of model weights by companies like Meta and OpenAI.

He compared such actions to selling enriched uranium: “Once you release the weights, anyone can fine-tune the system for harmful purposes.”

Good Actors Are Few

Anthropic, a company founded by former OpenAI researchers, is among the few Hinton praises. It devotes more resources to AI safety than its peers, but “probably not enough,” he said.

“Anthropic has more of a safety culture. But I worry investors will push them to move faster anyway.”

AI in the Wrong Hands

Beyond future hypotheticals, Hinton points to ways AI has already shaped global events—with unsettling consequences. He cited the role of AI-powered manipulation in the 2016 Brexit campaign and suggested that similar techniques may have contributed to Donald Trump’s election the same year.

“It was used during Brexit to make British people vote to leave Europe in a crazy way,” Hinton said, referring to how Cambridge Analytica harvested Facebook data and leveraged AI to target voters with precision.

“It probably helped with [Trump’s] election too,” he added. “We don’t know for sure because it was never really investigated.”

His implication is clear: AI is not just a future risk—it’s a present one. From election interference to mass surveillance and autonomous weapon development, artificial intelligence is already being used in ways that erode trust, destabilize democracies, and escape meaningful accountability.

“It’s not just about AI taking control in the future,” he said. “It’s about bad actors using AI to do bad things right now.”

Universal Basic Income? Maybe. But Not Enough.

While Hinton sees universal basic income (UBI) as a possible buffer against mass unemployment, he doubts it would be enough on its own. “People’s identity is tied to their work. If they lose that, money alone won’t restore their dignity.”

Do AI Systems Deserve Rights?

Once open to the idea, Hinton has changed his mind. “I care about people. Even if these machines are smarter than us, I’m willing to be mean to them.”

The Moment That Changed Everything

What led Hinton to leave Google and speak so openly? A realization: digital AI systems can share and scale learning across countless machines. Unlike our analog brains, they can learn and evolve collectively—billions of times faster.

“That got me scared,” he said. “They might become a better form of intelligence than us.”

Can We Build a Superintelligence That Doesn’t Want Power?

Perhaps the most profound—and unsettling—question Hinton poses is not whether superintelligent AI will be built, but how we might prevent it from wanting to take control in the first place.

“I don’t think there’s a way of stopping it from taking control if it wants to,” Hinton said. “So the question becomes: Can we design it so it never wants to?”

This isn’t just about aligning AI with human goals—it’s about doing so in a world where even human goals don’t align. “Human interests conflict with each other,” Hinton pointed out. “So what does it even mean to align AI with humanity?”

While some researchers believe we can engineer AI to be intrinsically benevolent or indifferent to power, Hinton is skeptical. “We should certainly try,” he said. “But nobody knows how to do that yet.”

He compared the problem to asking a machine to draw a line that’s parallel to two other lines at right angles to one another: a task that is impossible by construction.

The Brain’s Secret: How Do We Actually Learn?

Amid the headlines about superintelligence and regulation, Hinton remains driven by the question that brought him into AI in the first place: how does the brain work?

While deep learning has uncovered astonishing capabilities by training artificial neural networks on vast data sets, it still leaves a key biological mystery unsolved—how exactly does the brain adjust its internal connections to learn?

“The issue is: how do you get the information that tells you whether to increase or decrease the connection strength?” Hinton asked. “The brain needs to get that information, but it probably gets it in a different way from the algorithms we use now—like backpropagation.”

Backpropagation, the core mechanism behind training modern AI models, likely isn’t what human brains use. No one has figured out a biologically plausible alternative that could match its precision and speed. Yet clearly, our brains learn—and remarkably well.

Hinton believes that understanding how the brain gets “gradient information”—feedback about how to adjust its internal wiring—remains one of the most fundamental unanswered questions in science.

“If you can get that gradient information, we now know a big random network can learn amazing things,” he said. “That tells us something very deep about how the brain might work.”
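To make “gradient information” concrete, here is a minimal sketch that is not drawn from the interview. It adjusts a single connection strength `w` by plain gradient descent on a toy problem. The function name `train_single_weight`, the learning rate, and the data are illustrative assumptions. It is not backpropagation through a multi-layer network, and it makes no claim about how the brain obtains this signal. It only shows how the sign of the gradient tells a learner whether to increase or decrease a weight.

```python
def train_single_weight(inputs, targets, w=0.0, learning_rate=0.1, steps=100):
    """Fit y = w * x by gradient descent on mean squared error.

    Hypothetical toy example: one weight, no hidden layers.
    """
    n = len(inputs)
    for _ in range(steps):
        # Gradient of mean((w*x - y)^2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(inputs, targets)) / n
        # A positive gradient means w is too large, so decrease it;
        # a negative gradient means w is too small, so increase it.
        w -= learning_rate * grad
    return w


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0]
    ys = [2.0, 4.0, 6.0]  # toy data generated by the assumed rule y = 2x
    print(train_single_weight(xs, ys))
```

On this toy data the weight settles very close to 2, the value used to generate the targets. The open question Hinton describes is how a biological network could obtain an equivalent signal for each of its connections.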

Cyberattacks: The Invisible Threat Around the Corner

While much of the public discourse around AI focuses on job loss or fake videos, Hinton is gravely concerned about something far more immediate and dangerous: the rise of AI-powered cyberattacks.

“AI is going to be very good at designing cyberattacks,” he warned. “I don’t think the Canadian banks are safe anymore.”

For Hinton, this is more than speculation: he has acted on it. He has already spread his savings across three banks, not as a financial strategy but because he fears what an AI-generated hack could do to centralized financial systems.

“Suppose a cyberattack sells your shares, and your bank can’t reverse it—your money’s just gone,” he said.

As AI systems become more adept at deception, penetration, and system exploitation, Hinton believes even the world’s most secure infrastructures—from banking to national defense—could be vulnerable. And unlike nuclear weapons, which are guarded by physical barriers, AI can be deployed from a laptop.

“This is coming. And we’re not ready,” he said bluntly.

Final Plea: We Must Try

Despite the enormity of the task, Hinton urges governments, researchers, and citizens to act.

“We don’t know how to stop superintelligent AI from taking over,” he said. “But we must try. If we don’t, it will happen.”

“We’re at a very special point in history. And it’s hard—even for me—to emotionally absorb just how much everything could change.”