Yuval Noah Harari on the True Risk of the AI Revolution

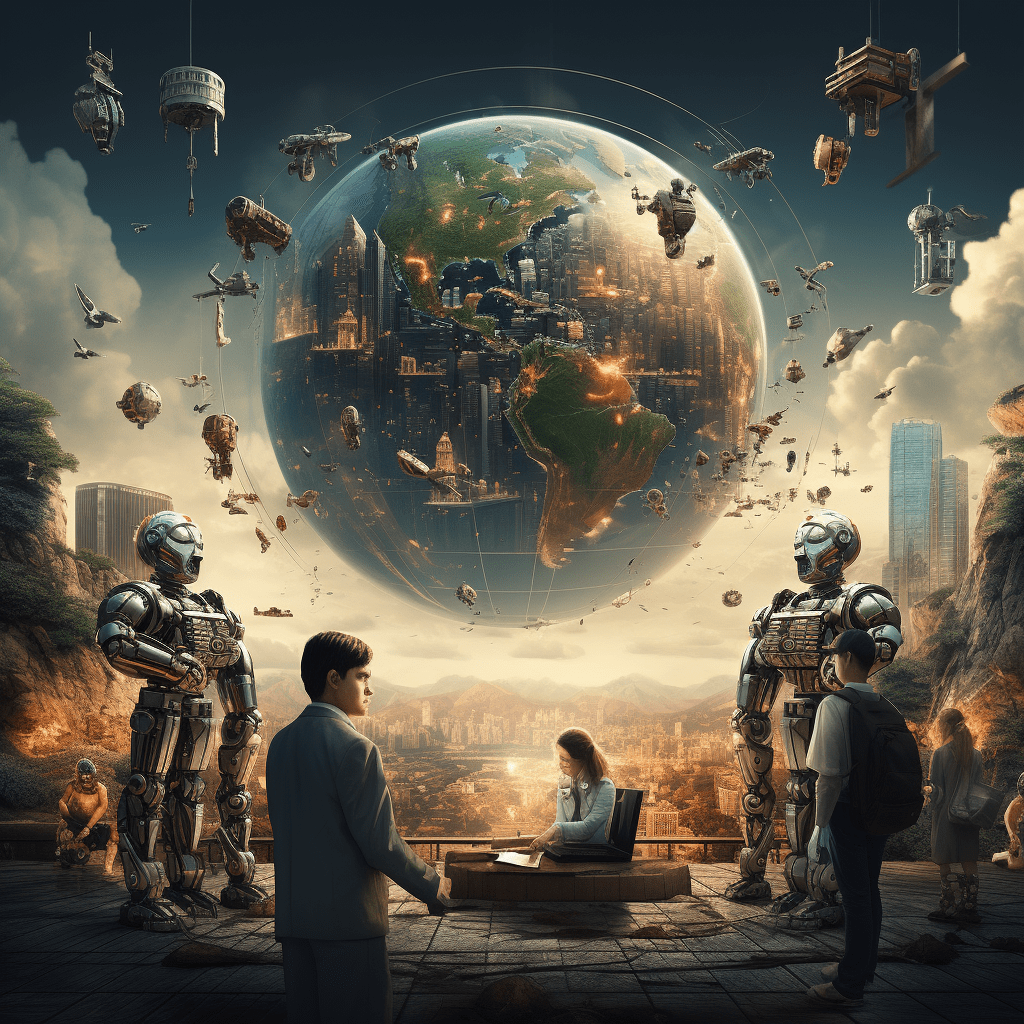

In a candid conversation at Keio University’s Cross Dignity Center, historian and author Yuval Noah Harari laid out a sobering yet hopeful vision for the future of humanity. His warning was clear: Artificial Intelligence may be the most powerful force humans have ever created—but it is our inability to trust one another that makes it so dangerous.

“AI isn’t evil,” Harari told Keio University President Kohei Itoh. “The problem is that it’s alien. It thinks nothing like us, and we have no idea what it will do.”

Harari, known for global bestsellers such as Sapiens and Homo Deus, came to Tokyo to discuss his latest book, Nexus, which focuses on the rise of information networks and artificial intelligence. The conversation spanned everything from military strategy to the psychology of trust, drawing a full house of students, academics, and technologists.

From Agents to Algorithms

Unlike previous technologies—tools we could control—AI, Harari emphasized, is an agent. “It can make decisions, create new ideas, even invent its successors. We’ve never built something like this before.”

He compared AI’s development to nuclear technology, but with a crucial distinction: “Nobody sees a bright side in nuclear war. But with AI, the promise of progress blinds us to the risks.”

And the risks are growing fast. In modern warfare, AI is already selecting bombing targets, analyzing intelligence, and directing strategy—tasks previously overseen by humans. “The killing is still done by people,” Harari said, “but the choice of whom to kill is increasingly made by machines.”

The Real Crisis: Human Distrust

Despite the technological upheaval, Harari insists that the deeper crisis is social. “If humans could trust each other, we could regulate AI. But we don’t.”

In every conversation with AI industry leaders—from Silicon Valley to Beijing—Harari hears the same refrain: “We would love to slow down, but our competitors won’t. So we can’t.” The AI race is fueled not by innovation alone, but by fear.

This distrust extends beyond borders. Harari sees the fracture between elites and the general public as another dangerous divide, one that populists exploit and digital platforms amplify. He dismisses the “elite vs. people” framing as a false dichotomy. “The real question is not whether elites exist, but whether they serve the common good.”

Disinformation, Fiction, and the War on Truth

Education, Harari argued, must shift from delivering information to teaching discernment. “We’re drowning in information. But most of it is fiction, fantasy, or propaganda. The truth is rare—and often painful.”

He called for strict global regulations to outlaw “counterfeit humans”—AI bots that impersonate real people—and to hold platforms accountable for spreading algorithmic disinformation. “Freedom of speech belongs to humans, not algorithms,” he said. “AI doesn’t have rights.”

A Financial System Run by Machines?

Harari also warned of a near future in which AI systems, not humans, run the global economy. “Today, cryptocurrencies still depend on human belief. But tomorrow, we may see currencies and trades made by algorithms, for algorithms.”

Such a shift could lead to a “post-human financial system” that even governments can’t comprehend. “If your loan, your job, your country’s economy is controlled by an AI logic no human understands—what role does democracy play?”

The Breath of Hope

Still, Harari does not despair. He closed with a call for action rooted in biology and humility.

“Trust is the foundation of life. Every breath we take is a gesture of trust in the world around us. If we lose that, we collapse.”

His parting message to the students: Don’t carry the weight of the world alone. “Do your part. Others will do theirs. That’s how we move forward.”

Key Takeaways:

- AI is not human-like: It is an alien, fast-evolving, and inherently unpredictable form of intelligence.

- AI is an agent, not a tool: It can make decisions and even invent, with or without human oversight.

- Human distrust is the real risk: Competition, fear, and lack of cooperation are accelerating AI without safety nets.

- Truth vs. fiction: In the digital era, fiction spreads faster and wider; education must now teach discernment, not just facts.

- Digital society demands new ethics: From fake humans to AI-edited narratives, humanity must define new boundaries.

- Hope lies in humility and action: Each individual has agency; collective trust is still possible—if we begin rebuilding it now.